Unique constraint and unique index existed for number of years now. However, I came across a problem for which I had to choose the right one as a solution to my problem.

I had a table where ReleaseId can only existed once in a table with either cancelled bit ON or OFF.

CREATE TABLE ReleaseState

(

Id int Identity(1,1),

ReleaseId bigint NOT NULL,

IsCancelled bit DEFAULT (0)

)

I first created unique constraint.

ALTER TABLE dbo.ReleaseState ADD CONSTRAINT UQ_ReleaseId UNIQUE (ReleaseId)

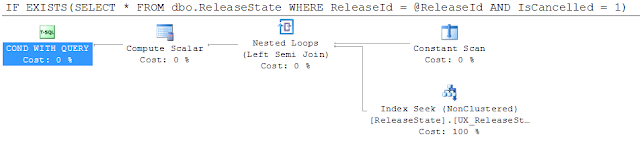

Then I checked the plan for my query:

IF EXISTS (SELECT * FROM dbo.ReleaseState WHERE ReleaseId = @ReleaseId AND IsCancelled = 1)

As you can see, unique constraint has created unique index internally and that is what SQL Server is using for my query. It is doing a seek on unique index and then retrieving full record for lookup of IsCancelled column.

I want to avoid extra lookup into heap. Can I include a column in the unique index? Create constraint SQL command does not provide me any option to do that. That is the main advantage (as opposed to unique constraint) of using unique index as it allows to take advantage of all index options.

So let’s remove the constraint and add unique index and include extra column value.

CREATE UNIQUE INDEX UX_ReleaseState_ReleaseId ON dbo.ReleaseState(ReleaseId) INCLUDE (IsCancelled)

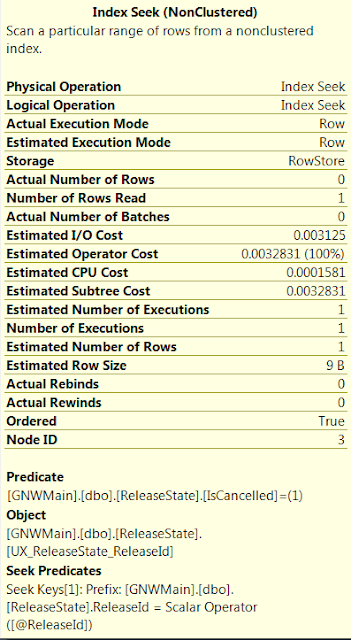

With unique index, I get following query plan.

Looking into seek operation, I see following:

This confirms that only seek operation is sufficient (with the help of index option INCLUDE) to cover my query and I can have SQL Server avoid extra heap look up for IsCancelled column.